In the first post of this series, we covered how to spin up a Vault server and auto-unseal it so that we can perform operations on its stored data. In this second post, we’ll discuss one of the ways a server can automatically authenticate to Vault without any human intervention.

Suppose you have a web application that needs an API token stored in Vault. If we were authenticating a human to the Vault server, we could use a username and a password, and that person could either memorize the password or store it in a local secrets manager such as 1Password. But in the case of a machine, we can’t just store the password as plaintext somewhere, because that would defeat the purpose of Vault. Sure, you can encrypt it, but then where do you keep the encryption key?

You could also use an encryption service such as AWS KMS or GCP KMS. But suppose now that your application runs in a cluster that scales up and down. How do the new machines get the token they need? If a secret is compromised, we need to be able to audit access and find out where the leak came from. If tokens are shared between these machines, this becomes much harder. How do we get credentials that are temporary and unique to each instance?

Vault supports highly pluggable mechanisms, or “backends,” and one of the most important is the authentication backend. It integrates with the underlying platform where your application is running and trusts that platform’s notion of identity. The major benefit is that the cloud provider has already done the hard work of providing credentials and identity documents to the workloads running on it, and its authentication protocols are thoroughly battle-tested. This means we can piggyback on the provider’s systems to validate the source of login requests.

There are two authentication methods for AWS, ec2 and iam, and two for GCP, gce and iam. The methods in the two clouds are analogous, so in this blog post, I’ll walk through the AWS examples. You can find the full AWS code examples here and the GCP-specific code examples here.

Vault AWS EC2 auth

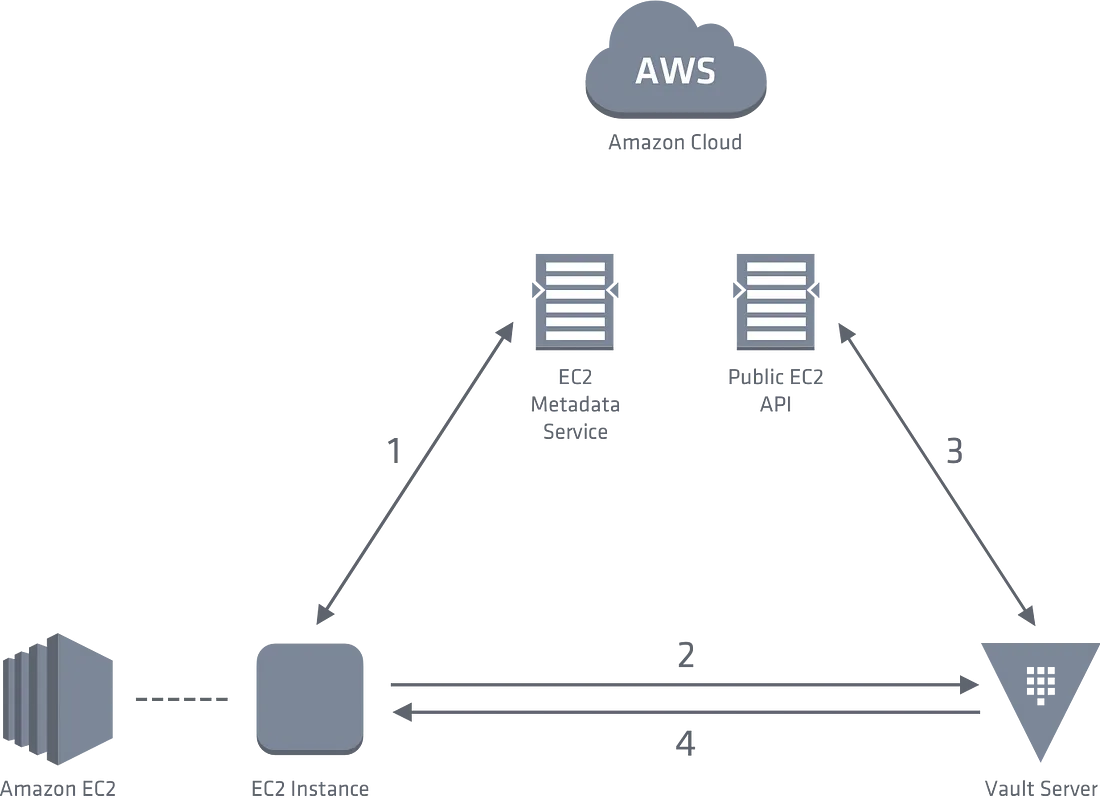

With the ec2 method, an EC2 instance uses the Instance Metadata endpoint that AWS provides to obtain an identity document and present it to Vault. Vault then validates the document with AWS and decides whether to authenticate the instance based on its attributes.

To use the EC2 auth mechanism, you must first enable the AWS auth backend on an unsealed Vault server. Then you can create your Vault Role using a pre-defined policy and configure which instance metadata attributes you would like to bind to this role, such as the AMI ID or the subnet or VPC the instance is in.

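```shell
# Enable the AWS auth backend
vault auth enable aws

# Policy that allows creating and reading secrets under secret/example_*
vault policy write "example-policy" - <<EOF
path "secret/example_*" {
  capabilities = ["create", "read"]
}
EOF

# Role that only instances launched from the given AMI can log in to
vault write \
  auth/aws/role/example-role-name \
  auth_type=ec2 \
  policies=example-policy \
  max_ttl=500h \
  bound_ami_id="$AMI_ID"
```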
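The role above binds only to an AMI ID. As a minimal sketch of binding to the subnet and VPC as well, the helper below adds the `bound_vpc_id` and `bound_subnet_id` role parameters. The function name `write_ec2_role` and the `VAULT_CMD` override are hypothetical conveniences that are not part of Vault; the override only exists so the command can be previewed without touching a real server.

```shell
# Hypothetical helper: create an ec2-type role bound to an AMI, a VPC, and a subnet.
# Arguments: role name, policy name, AMI ID, VPC ID, subnet ID.
# VAULT_CMD defaults to the real CLI; set VAULT_CMD=echo for a dry run.
write_ec2_role() {
  "${VAULT_CMD:-vault}" write "auth/aws/role/$1" \
    auth_type=ec2 \
    policies="$2" \
    max_ttl=500h \
    bound_ami_id="$3" \
    bound_vpc_id="$4" \
    bound_subnet_id="$5"
}

# Dry run (placeholder IDs), prints the command instead of executing it:
#   VAULT_CMD=echo write_ec2_role example-role-name example-policy ami-123 vpc-456 subnet-789
```

When several bindings are set, an instance has to satisfy all of them to log in, so each extra binding narrows the set of machines that can assume the role.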

Your app server can then try to authenticate by using the PKCS7 signature of the AWS Instance Identity Document as proof of identity. It is cryptographically signed by AWS and accessible from the instance itself via the Instance Metadata Service. After fetching the signature, the client sends it along with the login request to Vault. Vault then verifies the identity of the instance against AWS, checks the instance attributes against the pre-defined Vault Role, and returns a JSON object with your login information.

```shell
pkcs7=$(curl -s \
  "http://169.254.169.254/latest/dynamic/instance-identity/pkcs7" | tr -d '\n')

data=$(cat <<EOF
{
  "role": "example-role-name",
  "pkcs7": "$pkcs7"
}
EOF
)

curl --request POST \
  --fail \
  --data "$data" \
  "https://vault.service.consul:8200/v1/auth/aws/login"
```

The response from the initial login via the HTTP API will be similar to this:

```json
{
  "request_id": "eed334ef-30bc-44a4-2a7f-93ecd7ce23cd",
  "lease_id": "",
  "renewable": false,
  "lease_duration": 0,
  "data": null,
  "wrap_info": null,
  "warnings": [
    "TTL of \"768h0m0s\" exceeded the effective max_ttl of \"500h0m0s\"; TTL value is capped accordingly"
  ],
  "auth": {
    "client_token": "0ac5b97d-9637-9c03-ce37-77565ed66b8a",
    "accessor": "f56d56cf-b3a9-d77b-439e-5ea42563a62b",
    "policies": [
      "default",
      "example-policy"
    ],
    "token_policies": [
      "default",
      "example-policy"
    ],
    "metadata": {
      "account_id": "738755648600",
      "ami_id": "ami-0a50e8de57a8606a7",
      "instance_id": "i-0e1c0ef82afa24a7c",
      "nonce": "d60cf363-eb83-3142-74c3-647445365e32",
      "region": "eu-west-1",
      "role": "dev-role",
      "role_tag_max_ttl": "0s"
    },
    "lease_duration": 1800000,
    "renewable": true,
    "entity_id": "5051f586-eef5-064e-eca6-768b1de7d19f"
  }
}
```
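The `lease_duration` inside `auth` is expressed in seconds, and the warning shows a requested TTL being capped at the role's `max_ttl`. A small sketch of that arithmetic follows; the function names `lease_hours` and `effective_ttl_hours` are hypothetical and only illustrate how the numbers in the sample response relate to each other.

```shell
# lease_duration is reported in seconds; convert it to whole hours.
lease_hours() {
  echo $(( $1 / 3600 ))
}

# The requested TTL is capped at max_ttl, so the smaller value wins.
# Arguments: requested TTL in hours, max_ttl in hours.
effective_ttl_hours() {
  if [ "$1" -gt "$2" ]; then echo "$2"; else echo "$1"; fi
}

lease_hours 1800000          # prints 500
effective_ttl_hours 768 500  # prints 500
```

The 1800000 seconds in the response are therefore exactly the 500h configured as `max_ttl` on the role.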

Inside this successful response from the Vault server, your client will find the client token (`auth.client_token`), an ephemeral token that you need in order to perform operations on Vault. If you wanted to, for example, read a secret from Vault, you’d pass this token as a header in your request:

```shell
curl --fail \
  -H "X-Vault-Token: $client_token" \
  -X GET \
  "https://vault.service.consul:8200/v1/secret/example_secret"
```
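The request above assumes `$client_token` has already been set from the login response. One possible way to extract it, sketched with `sed` to avoid extra dependencies, is shown below. The function name `extract_client_token` is hypothetical, and the pattern is a simplification that relies on the token value containing no escaped quotes; a real JSON parser such as `jq`, if available, would be a more robust choice.

```shell
# Hypothetical helper: read a Vault login response on stdin and
# print the value of the first "client_token" field.
extract_client_token() {
  sed -n 's/.*"client_token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Example with a trimmed-down response body:
echo '{"auth": {"client_token": "0ac5b97d-9637-9c03-ce37-77565ed66b8a"}}' \
  | extract_client_token
```

In a login script, the output of the login `curl` call could be piped into this function and captured with `client_token=$(...)`.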

Next steps

There are, unfortunately, some limitations to the ec2 method:

- The Instance Identity Document is always the same for a running instance, so any rogue process on the server could access it at any time. To address this issue, Vault’s default behavior is TOFU (trust on first use). The first login attempt returns a client “nonce” that needs to be included in subsequent login attempts should you need to re-authenticate. So if the PKCS7 signature gets compromised, attempts to log in again without the nonce will be denied.

- Identity Documents are only available on EC2 instances, not on any other AWS resources. To address this issue, we have to turn to another authentication method, iam auth. This method is useful not only for EC2 instances but also for other services such as Lambda functions and ECS tasks.
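To make the first point concrete, here is a minimal sketch of how a client might keep the nonce from its first login (the `auth.metadata.nonce` field of the response) and send it again on re-authentication. The file location and the function names `save_nonce` and `build_login_payload` are hypothetical, and the sketch assumes none of the values need JSON escaping, which holds for base64 PKCS7 data and UUID-style nonces.

```shell
# Hypothetical location for the stored nonce; override via the environment.
NONCE_FILE="${NONCE_FILE:-/var/run/vault-nonce}"

# Persist the nonce returned by the first login, readable by the owner only.
save_nonce() {
  ( umask 077; printf '%s' "$1" > "$NONCE_FILE" )
}

# Build the login payload, adding the nonce once one has been saved.
# Arguments: role name, PKCS7 signature.
build_login_payload() {
  if [ -s "$NONCE_FILE" ]; then
    printf '{"role": "%s", "pkcs7": "%s", "nonce": "%s"}\n' \
      "$1" "$2" "$(cat "$NONCE_FILE")"
  else
    printf '{"role": "%s", "pkcs7": "%s"}\n' "$1" "$2"
  fi
}
```

The nonce is itself a secret of sorts: a rogue process that can read both the identity document and the nonce file can still re-authenticate, which is why the sketch restricts the file permissions.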

We will cover the second point in the last post of this series, authenticating to Vault with an IAM user or role. In the meantime, feel free to check out our open source repositories, which contain modules and examples showing how to spin up and use Vault clusters on AWS and GCP.
