Once a month, we send out a newsletter to all Gruntwork customers that describes all the updates we’ve made in the last month, news in the DevOps industry, and important security updates. Note that many of the links below go to private repos in the Gruntwork Infrastructure as Code Library and Reference Architecture that are only accessible to customers.

Hello Grunts,

In the last month, we added production-ready usage patterns for ELK, updated our ECS modules to do deployment checks, added support for Aurora serverless, made a large number of updates to Terratest, including a log parser that makes it easier to debug failing tests, and fixed a large number of bugs.

As always, if you have any questions or need help, email us at support@gruntwork.io!

Gruntwork Updates

ELK Usage Pattern

Motivation: Last August, we released a set of modules for running the ELK Stack (Elasticsearch, Logstash, Kibana) on top of AWS: package-elk. We got requests from customers about the best ways to use those modules, both in pre-prod and production environments.

Solution: We’ve updated our Acme Reference Architecture with two examples:

- Multi-cluster ELK deployment: This code shows how to run all the different components of the ELK stack (Elasticsearch master nodes, Elasticsearch data nodes, Logstash nodes, Kibana nodes) in separate clusters (that is, separate Auto Scaling Groups). This is the recommended deployment for production, as it lets you scale each of these clusters separately.

- Single-cluster ELK deployment: This code shows how to run all the different components of the ELK stack in a single cluster (a single Auto Scaling Group). This is the recommended deployment for pre-prod environments, as it allows you to keep the number of instances small and save money.

What to do about it: Use the code above to deploy package-elk in your own AWS accounts!

ECS service deployment checks

Motivation: When using Terraform to deploy Docker containers to ECS, the built-in aws_ecs_service resource reports “success” as soon as the container has been scheduled for deployment. However, it doesn’t check whether ECS actually managed to deploy your service! So terraform apply completes successfully, and you don’t get any errors, but in reality, the container may fail to deploy due to a bug or the ECS cluster being out of resources.

Solution: All of the ecs-service modules in module-ecs will now run a separate binary as part of the deployment to verify the container is actually running before terraform apply completes. This binary will wait for up to 10 minutes (configurable via the deployment_check_timeout_seconds input parameter) before timing out the check. If the check fails, the binary will output the last 5 events on the ECS service, helping you debug potential deployment failures during the terraform apply. In addition, if you set up an ALB or NLB with the service, the binary will check the ALB/NLB to verify the container is passing health checks.

What to do about it: The binary will automatically be triggered with each deploy when you update to module-ecs, v0.10.0. This binary requires a working Python install to run (supports versions 2.7, 3.5, 3.6, and 3.7). If you do not have a working Python install, you can get the old behavior by setting the enable_ecs_deployment_check module input to false.

Aurora serverless (plus, deletion protection)

Motivation: Customers have been asking us for the ability to use Aurora Serverless, which is an on-demand relational database (MySQL compatible) that will start up, shut down, and scale on demand, without you having to provision or manage servers in advance. This is especially useful in pre-prod environments and for sporadically used apps, where you want the database to power down when not in use, so you don’t have to pay for it.

Solution: Our aurora module now supports Aurora serverless! Just set the engine_mode parameter to "serverless" and you’re good to go! You can also configure scaling settings using the new scaling_configuration_xxx parameters and enable deletion protection using the deletion_protection parameter.

What to do about it: Update to module-data-storage, v0.7.1 and give Aurora Serverless a try!

Terratest log parser helper binary for breaking out test logs

Motivation: Infrastructure tests can be slow. Therefore, you typically (a) log every action the test code takes so that you can debug issues purely from the logs, without having to re-run the slow tests and (b) run as many tests in parallel as you can. However, when you do this, all the logs get interleaved due to the concurrent nature of test execution. This makes it difficult to piece out what is going on when a test fails.

Solution: Terratest now ships a log parsing binary that can be used to piece out what is happening in automated tests written in Go. To use the binary, you must first extract the logs to a file and then feed that file to the log parser. Here’s an example:

# Run your Go tests and send the output to a file

go test | tee test-logs.txt

# Pass the file through the log parser

terratest_log_parser --testlog test-logs.txt --outputdir /tmp/logs

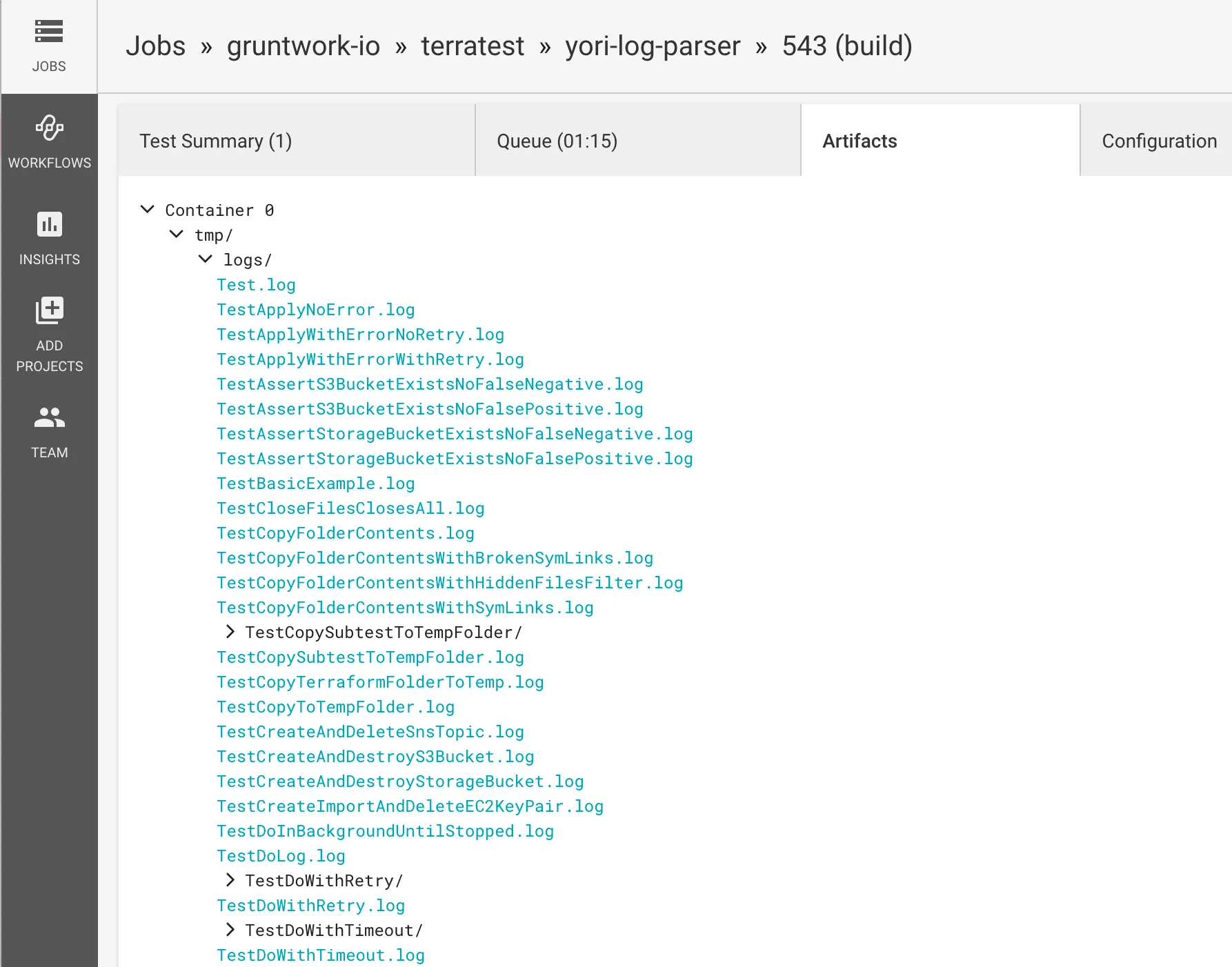

The command will then break out the interleaved entries by test, outputting each test log to its own file in a specified directory. Here’s what that output looks like in CircleCI:

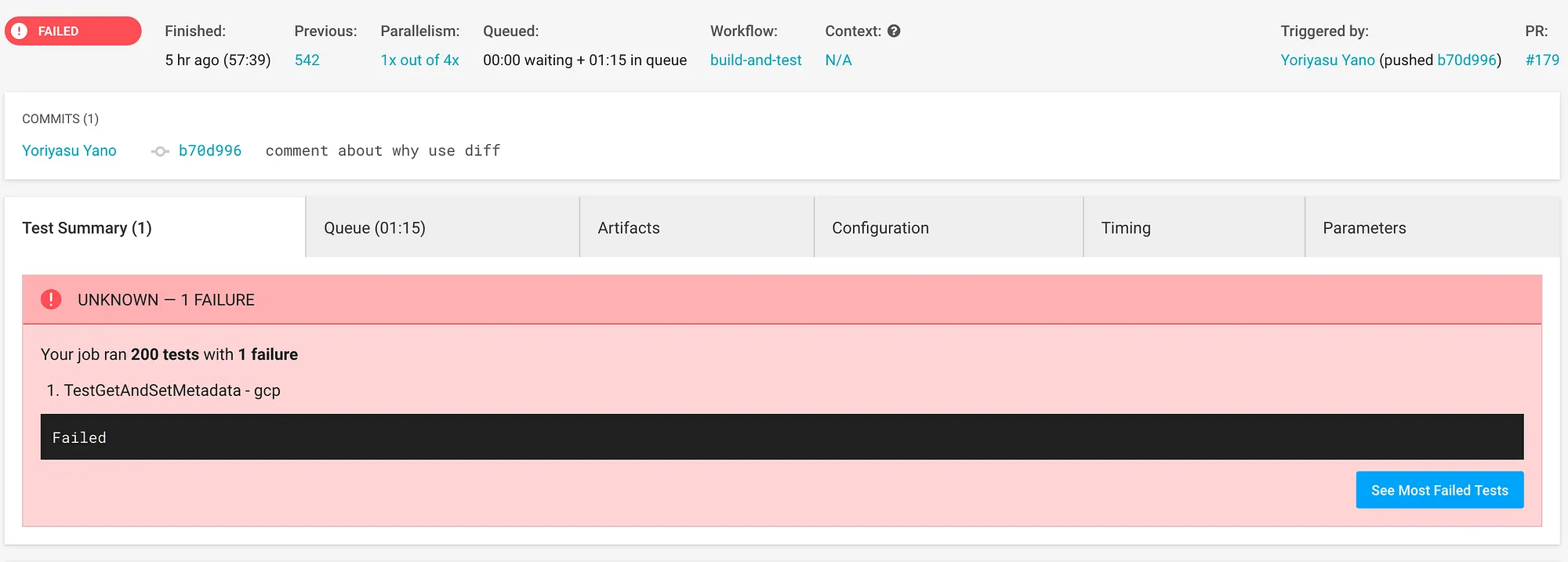

In addition, the log parser will emit a JUnit XML report that CI engines can use for additional insights, such as making it much easier to see which test failed.

What to do about it: You can install the helper binary using the gruntwork-installer and then take a look at the README for a walk-through of how to use the command. There is no need to upgrade your test code to terratest v0.13.8, as the binary does not depend on any updates to the tests themselves.
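To illustrate the idea behind breaking out interleaved logs by test, here is a small sketch in Go. It is not the implementation of terratest_log_parser: the function name splitByTest is hypothetical, and the sketch assumes, purely for illustration, that every log line begins with the test name followed by a space. The log lines in main are made-up sample data.

```go
package main

import (
	"bufio"
	"fmt"
	"sort"
	"strings"
)

// splitByTest groups interleaved log lines by test name. It assumes each
// line starts with the test name (a token beginning with "Test") followed by
// a space. Lines that do not match are collected under "unattributed".
func splitByTest(logs string) map[string][]string {
	byTest := make(map[string][]string)
	scanner := bufio.NewScanner(strings.NewReader(logs))
	for scanner.Scan() {
		line := scanner.Text()
		if strings.TrimSpace(line) == "" {
			continue
		}
		parts := strings.SplitN(line, " ", 2)
		if len(parts) < 2 || !strings.HasPrefix(parts[0], "Test") {
			byTest["unattributed"] = append(byTest["unattributed"], line)
			continue
		}
		byTest[parts[0]] = append(byTest[parts[0]], parts[1])
	}
	return byTest
}

func main() {
	// Made-up sample of two tests logging concurrently.
	interleaved := `TestVpc Running terraform init
TestEcs Running terraform init
TestVpc Running terraform apply
TestEcs Running terraform apply
TestVpc Apply complete
`
	byTest := splitByTest(interleaved)

	// Print each test's lines together, in a stable order.
	names := make([]string, 0, len(byTest))
	for name := range byTest {
		names = append(names, name)
	}
	sort.Strings(names)
	for _, name := range names {
		fmt.Println("==", name)
		for _, line := range byTest[name] {
			fmt.Println("  ", line)
		}
	}
}
```

The real binary writes each test's log to its own file in the output directory; this sketch keeps everything in memory to stay short.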

RDS snapshot Lambda function refactor

Motivation: We have several Lambda functions in module-data-storage that make it easy to automatically back up databases running in RDS to another AWS account on a scheduled basis. We wrote these Lambda functions a while ago, and they were using our old Lambda code, had deprecation warnings, and did not allow names to be customized, so it was possible to end up with a name that exceeded the maximum length allowed by AWS.

Solution: We’ve updated and refactored all these Lambda functions! The lambda-cleanup-snapshots, lambda-copy-shared-snapshot, lambda-create-snapshot, and lambda-share-snapshot modules now all use package-lambda under the hood (instead of the older lambda code that used to live in module-ci) and expose optional lambda_namespace and schedule_namespace parameters that you can use to completely customize all the names of resources created by these modules.

What to do about it: Update to module-data-storage, v0.7.0.

Terratest updates

- Terratest, v0.13.1: Add a new terraform.OutputList function for reading and parsing lists returned by terraform output.

- Terratest, v0.13.2: Add new methods for looking up info about AMIs, including GetAmiPubliclyAccessible and GetAccountsWithLaunchPermissionsForAmi.

- Terratest, v0.13.3: Add a collection of functions that support SSHing into a GCP Compute Instance.

- Terratest, v0.13.4: Add new methods for looking up info about GCP Instance Groups, including GetInstances and GetPublicIps.

- Terratest, v0.13.6: Add OutputMap / OutputMapE functions to read and parse maps from terraform output.

- Terratest, v0.13.7: In GCP, the GetRandomZone() function now accepts an argument for forbiddenRegions.

- Terratest, v0.13.8: Added the terratest_log_parser as described earlier in this blog post.

- Terratest, v0.13.9: Adds a new convenience method for building multiple packer templates concurrently. Prior to this release, if you needed to build several AMIs in your tests, you had to either write the parallelization code yourself or run the packer builds one after another. See the release page for more details.

- Terratest, v0.13.10: Update README and help text for terratest_log_parser.

- Terratest, v0.13.11: Removes broken symlink from test fixtures, fixing error when terratest dir is copied by packer.

- Terratest, v0.13.12: Fixed two issues that affect the ability to scp files from the remote servers that you are testing. See full details here.

Open source updates

- fetch, v0.3.2: Update dependencies from github.com/codegangsta/cli to its new name, gopkg.in/urfave/cli.v1. There should be no change in behavior.

- Terragrunt, v0.17.1: Fix a bug where prompts on stdin were not showing up correctly.

- cloud-nuke, v0.1.4: cloud-nuke will now delete ECS services and tasks.

- bash-commons, v0.0.7: A new function file_fill_template was added to allow replacing specific template strings in a file with actual values.

- terraform-aws-consul, v0.4.1: You can now configure a service-linked role for the ASG used in the consul-cluster module using the new (optional) service_linked_role_arn parameter.

- terraform-gcp-consul, v0.3.0, v0.3.1: Use different GCP projects for launching your cluster, fetching your compute image, and referencing your network resources.

Other updates

- package-lambda, v0.3.0: The scheduled-lambda-job module now namespaces all of its resources with the format "${var.lambda_function_name}-scheduled" instead of "${var.lambda_function_name}-scheduled-lambda-job". This makes names shorter and less likely to exceed AWS name length limits. If you wish to override the namespacing behavior, you now set a new input variable called namespace.

- module-aws-monitoring, v0.10.0: Fix the alarm name used by the asg-disk-alarms module to include the file system and mount path. This ensures that if you create multiple alarms for multiple disks on the same auto scaling groups, they each get a unique name, rather than overwriting each other.

- module-asg, v0.6.18: Fix the asg-rolling-deploy module so the script it uses within works with either Python 2 or Python 3.

- module-asg, v0.6.19: You can now launch two or more server-group modules in a sequential order instead of only in parallel. This is useful when creating a collection of clusters where Cluster A may depend on Cluster B.

- package-zookeeper, v0.4.8: Update to module-asg version v0.6.18 so that the rolling deploy script works with either Python 2 or 3. Upgrade Oracle JDK installer to version 8u192-b12.

- package-zookeeper, v0.4.9: Update zookeeper_servers to use the latest module-asg and then expose the rolling_deployment_done as an output so that other modules can be launched after this module deploys.

- module-ci, v0.13.3: Update the git-add-commit-push script to check there are files staged for commit before trying to commit.

- package-kafka, v0.4.2: The run-kafka script now exposes params to configure SSL protocols and ciphers, SASL authentication, ACLs, ZooKeeper chroot, and JMX. The Kafka Connect, Schema Registry, and REST Proxy modules now allow you to configure a keystore for validating SSL connections.

- module-ecs, v0.9.0: The ecs-service-with-discovery module will now create an IAM role for the ECS task that can be extended with custom policies, similar to the ecs-service module. Note: this is a backwards incompatible change. Refer to the release notes for more information.

DevOps News

AWS Lambda functions can now run for up to 15 minutes!

What happened: AWS has announced that Lambda functions can now run for up to 15 minutes!

Why it matters: Lambda functions used to be limited to a max runtime of 5 minutes. This made them useful for short, one-off tasks, but any workload that took longer than that would have to be executed elsewhere (e.g., in an ECS Cluster). The time limit has now been increased to 15 minutes, which means you can use Lambda functions for an even larger variety of use cases.

What to do about it: This feature is available everywhere immediately. Simply set the timeout of your Lambda function to 15 minutes (900 seconds), and you’ll be good to go!

Terraform Enterprise changes

What happened: At HashiConf 2018, the HashiCorp team announced some major changes with Terraform Enterprise:

- Three tiers: a free tier for individuals and small teams, a business tier for small to medium companies, and an enterprise tier for large companies.

- Remote state: Remote Terraform state management within Terraform Enterprise is now free for teams of all sizes.

- Remote plan and apply: For enterprise customers, Terraform can now run your plan and apply commands remotely, in the Terraform Enterprise SaaS product (rather than on your own computer), while still streaming logs and data back to your own computer.

- Atlantis: The Atlantis team is joining HashiCorp and will be integrating their work into Terraform Enterprise.

Why it matters: It looks like the HashiCorp team is making a push for all Terraform users to move to Terraform Enterprise (at one of the three tiers) to simplify collaboration and team workflows.

What to do about it: For now, the only thing you can do is to sign up for a waitlist for the free remote state management functionality. However, keep your eye on this space, as new functionality will likely be rolling out soon.

Amazon ECS-optimized Linux 2 now available

What happened: Amazon’s ECS-optimized AMI now supports Amazon Linux 2.

Why it matters: Amazon Linux 2 includes systemd, newer versions of the Linux kernel, C library, compiler, and tools, and access to more/newer software packages. It’s also the version of Amazon Linux that will get long-term support (at least through 2023).

What to do about it: If you are using ECS, you may wish to update your Packer templates to use the new ECS-optimized AMIs. You can find the AMI name pattern to put into the source_ami_filter param in your Packer template, as well as the latest AMI IDs, on this page. Note that you need to use the following versions of Gruntwork modules to get Amazon Linux 2 support:

- bash-commons: at least v0.0.3 (latest is v0.0.7)

- module-ecs: Any version should work

- module-aws-monitoring: at least v0.9.2 (latest is v0.10.0)

- gruntkms: at least v0.0.7 (latest is v0.0.7)

- module-security: at least v0.9.0 (latest is v0.15.3). Note, to install fail2ban on Amazon ECS-optimized Linux 2, you’ll need to install yum-utils first (e.g., add sudo yum install -y yum-utils earlier in your Packer template).

Once you’ve built a new AMI, follow this guide to roll it out across your ECS cluster.
